Exploring Wyvern Open Data On Metaspectral Fusion Platform

on Mon May 12 2025

Guillaume

Recently, Wyvern announced the launch of its Open Data Program, which provides free access to a collection of high-resolution visible and near-infrared (VNIR) hyperspectral images. Images from its Dragonette-001 satellite have 23 bands in the 500 to 800 nm range and an impressive spatial resolution of 5.3 m at nadir. Hyperspectral imaging is one of the most promising technologies for advancing Earth observation across many industries, including agriculture, forestry, mining, oceanography and security. It offers distinct advantages over conventional multispectral imaging because its many narrow, contiguous bands can detect subtle biochemical and biophysical variations in land cover.

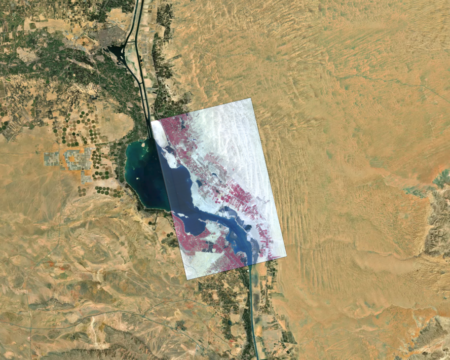

Today we are exploring one of Wyvern’s images of the Suez Canal in Egypt using Metaspectral’s unique cloud-based Fusion platform. Fusion was specifically designed to handle the large volumes of data produced by hyperspectral imaging and to process them with state-of-the-art machine learning and deep learning algorithms. Let’s dive in!

Upload & Explore Images

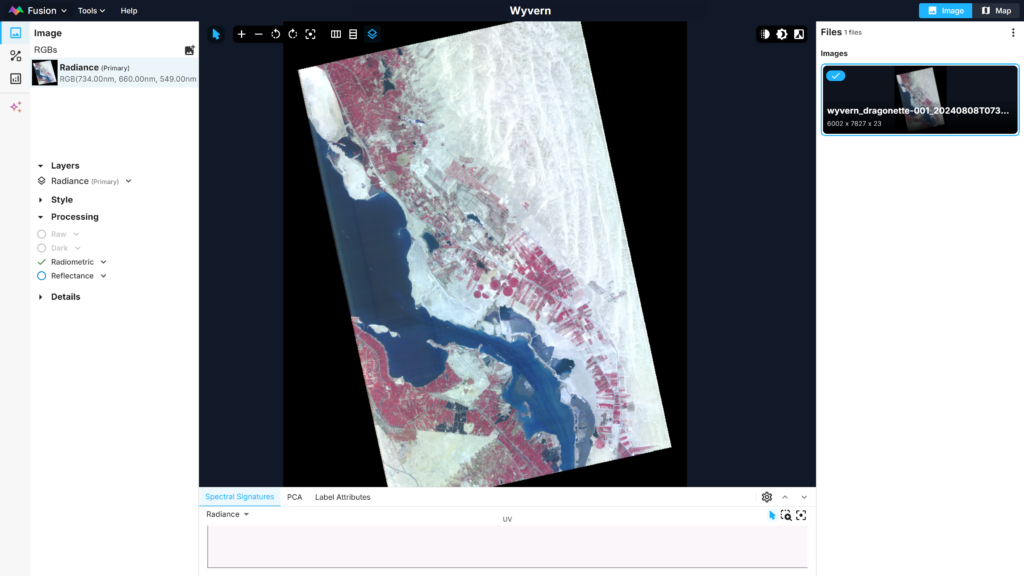

Fusion runs entirely in your browser, so uploading the image to the platform is just a matter of dragging and dropping it. The image is in radiance units (W/(m²·sr·µm)) and contains nearly 50 megapixels. It shows a portion of the Great Bitter Lake, where several ships are navigating.
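To put "nearly 50 megapixels" in perspective, a quick estimate of the uncompressed cube size helps. This is a minimal sketch under stated assumptions: exactly 50 million pixels, all 23 bands kept, and radiance stored as 32-bit floats. The actual storage type and compression of Wyvern's products may differ.

```python
# Back-of-the-envelope size of one radiance cube.
# Assumptions (not from Wyvern's product spec): exactly 50 million pixels,
# all 23 bands stored, and 32-bit floating-point radiance values.
N_PIXELS = 50_000_000      # "nearly 50 megapixels", rounded up
N_BANDS = 23               # VNIR bands between 500 and 800 nm
BYTES_PER_VALUE = 4        # float32 (assumed storage type)


def cube_size_bytes(n_pixels: int, n_bands: int, bytes_per_value: int) -> int:
    """Return the uncompressed size of a pixels x bands cube in bytes."""
    return n_pixels * n_bands * bytes_per_value


size = cube_size_bytes(N_PIXELS, N_BANDS, BYTES_PER_VALUE)
print(f"{size / 1e9:.1f} GB ({size / 2**30:.2f} GiB)")
```

Under these assumptions a single scene is several gigabytes, which is why processing hundreds of them benefits from cloud resources.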

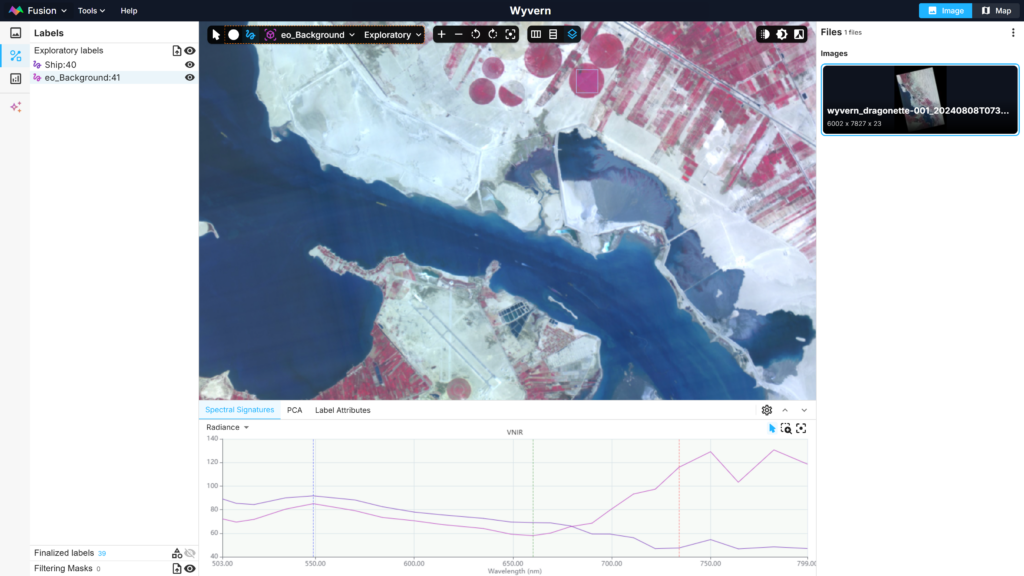

After labeling some ships and some crops, we can see distinct differences between the two spectral signatures. The crop signature shows the characteristic sharp rise across the red edge (roughly 680 to 750 nm), while the ship signature shows the opposite trend.
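The comparison above boils down to averaging the spectra inside each labeled region and looking at how the signal changes across the red edge. The sketch below illustrates that idea with NumPy on a tiny synthetic scene. The evenly spaced band centres, the 680/750 nm endpoints and the `mean_spectrum`/`red_edge_slope` helpers are hypothetical; real band centres should be read from the image metadata, and this is not how Fusion computes its plots.

```python
import numpy as np

# Hypothetical band centres: 23 bands evenly spaced over 500-800 nm.
# Wyvern's actual band centres differ; read them from the image metadata.
wavelengths = np.linspace(500.0, 800.0, 23)


def mean_spectrum(cube: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Average the spectra of all pixels where mask is True.

    cube has shape (rows, cols, bands); mask has shape (rows, cols).
    """
    return cube[mask].mean(axis=0)


def red_edge_slope(spectrum: np.ndarray, wl: np.ndarray,
                   lo: float = 680.0, hi: float = 750.0) -> float:
    """Change in signal per nm between the bands nearest to lo and hi."""
    i = int(np.argmin(np.abs(wl - lo)))
    j = int(np.argmin(np.abs(wl - hi)))
    return float((spectrum[j] - spectrum[i]) / (wl[j] - wl[i]))


# Synthetic 4x4 scene: left half "crop", right half "ship" (illustrative only).
crop = 20.0 + 40.0 / (1.0 + np.exp(-(wavelengths - 715.0) / 10.0))  # sigmoid rise at the red edge
ship = 60.0 - 0.05 * (wavelengths - 500.0)                           # gently decreasing
cube = np.empty((4, 4, wavelengths.size))
cube[:, :2] = crop
cube[:, 2:] = ship
crop_mask = np.zeros((4, 4), dtype=bool)
crop_mask[:, :2] = True

print(red_edge_slope(mean_spectrum(cube, crop_mask), wavelengths))   # positive
print(red_edge_slope(mean_spectrum(cube, ~crop_mask), wavelengths))  # negative
```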

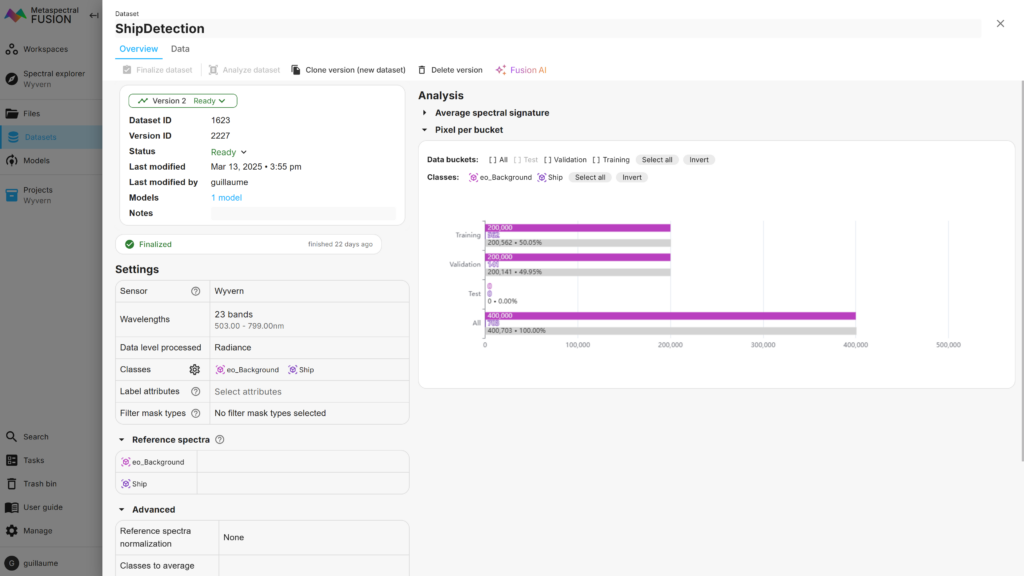

Build Dataset & Model

After exploring the image, it’s time to build a deep learning target detection model that identifies ships navigating the Suez Canal from their spectral signatures. In Fusion, this takes two quick steps. First, we build a dataset from the labeled areas in the image. Since we are interested in target detection, only two label categories were created: ship and background (anything that is not a ship). Note that a dataset can also be created directly from a single reference spectrum, for instance one taken from a spectral library. In that case, the dataset consists of synthetic pixels generated from the reference spectrum.
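The post does not say how Fusion generates synthetic pixels from a reference spectrum. As one plausible illustration only, the hypothetical `synthesize_pixels` helper below perturbs the reference with a random brightness factor (a stand-in for illumination changes) and additive Gaussian noise (a stand-in for sensor noise). The scale range and noise level are assumptions chosen for the example.

```python
import numpy as np


def synthesize_pixels(reference: np.ndarray, n: int,
                      scale_range=(0.8, 1.2), noise_std=0.01,
                      seed: int = 0) -> np.ndarray:
    """Generate n synthetic spectra from one reference spectrum (hypothetical scheme).

    Each synthetic pixel is the reference multiplied by a random brightness
    factor plus Gaussian noise whose standard deviation is noise_std times
    the reference's mean level. Returns an array of shape (n, bands).
    """
    rng = np.random.default_rng(seed)
    scales = rng.uniform(*scale_range, size=(n, 1))
    noise = rng.normal(0.0, noise_std * reference.mean(), size=(n, reference.size))
    return scales * reference[None, :] + noise


# Usage with a made-up 23-band reference spectrum.
reference = np.linspace(1.0, 2.0, 23)
pixels = synthesize_pixels(reference, 1000)
print(pixels.shape)
```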

As shown below, 562 ship pixels were used for the training set and 141 for the validation set, while 200,000 background pixels were used for both sets.
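The ship counts correspond to 703 labeled ship pixels split roughly 80/20 (562/703 ≈ 0.80). That ratio is inferred from the numbers, not a documented Fusion setting. The sketch below shows a per-class random split that reproduces those counts, plus an inverse-frequency weight as one common (assumed) way to handle the heavy class imbalance. The `split_class` helper and the dummy spectra are illustrative.

```python
import numpy as np


def split_class(pixels: np.ndarray, train_fraction: float = 0.8,
                seed: int = 0) -> tuple[np.ndarray, np.ndarray]:
    """Randomly split one class's pixels, shape (n, bands), into train and validation sets."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(pixels))       # shuffle pixel indices
    n_train = round(train_fraction * len(pixels))
    return pixels[order[:n_train]], pixels[order[n_train:]]


# Placeholder spectra: 703 labeled ship pixels x 23 bands (values are dummies).
ship_pixels = np.zeros((703, 23), dtype=np.float32)
ship_train, ship_val = split_class(ship_pixels)
print(len(ship_train), len(ship_val))

# Background pixels vastly outnumber ship pixels, so weighting the loss by
# inverse class frequency is one common remedy (an assumption, not Fusion-confirmed).
n_background = 200_000
ship_weight = n_background / len(ship_train)
print(f"ship pixels weighted about {ship_weight:.0f}x background")
```

Splitting each class separately keeps the rare ship class represented in both sets, which a single random split over all pixels would not guarantee.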

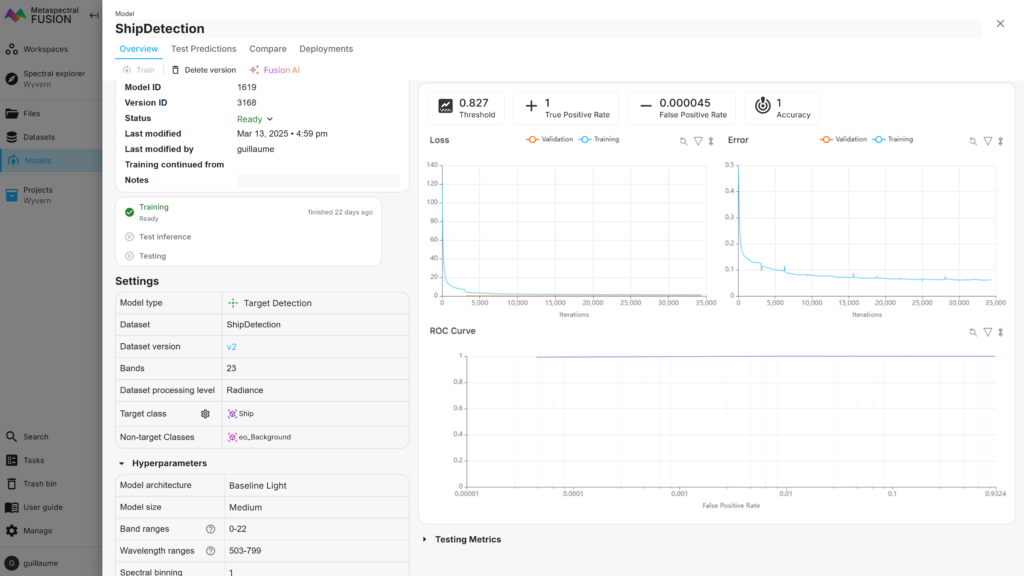

Next, we train a custom deep learning ship detection model on this dataset. As shown below, the model identified all ship pixels correctly and achieved a false positive rate of only 0.000045 (0.0045%).
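For reference, the false positive rate is FP / (FP + TN), the fraction of background pixels wrongly flagged as ship. Assuming the reported rate was measured over 200,000 background pixels, 0.000045 corresponds to about 9 false alarms. The sketch below computes the true and false positive rates for binary pixel labels; the predictions are fabricated placeholders sized to match the validation set, not Fusion's actual output.

```python
import numpy as np


def detection_rates(y_true: np.ndarray, y_pred: np.ndarray) -> tuple[float, float]:
    """Return (true positive rate, false positive rate) for binary labels.

    TPR = TP / (TP + FN): fraction of ship pixels detected.
    FPR = FP / (FP + TN): fraction of background pixels flagged as ship.
    """
    y_true = y_true.astype(bool)
    y_pred = y_pred.astype(bool)
    tp = np.sum(y_true & y_pred)
    fn = np.sum(y_true & ~y_pred)
    fp = np.sum(~y_true & y_pred)
    tn = np.sum(~y_true & ~y_pred)
    return float(tp / (tp + fn)), float(fp / (fp + tn))


# Placeholder validation set: 141 ship pixels and 200,000 background pixels,
# with every ship found and 9 background pixels misflagged (hypothetical).
y_true = np.concatenate([np.ones(141), np.zeros(200_000)])
y_pred = y_true.copy()
y_pred[141:150] = 1  # 9 hypothetical false alarms
tpr, fpr = detection_rates(y_true, y_pred)
print(tpr, fpr)  # 1.0 4.5e-05
```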

Visualize Results

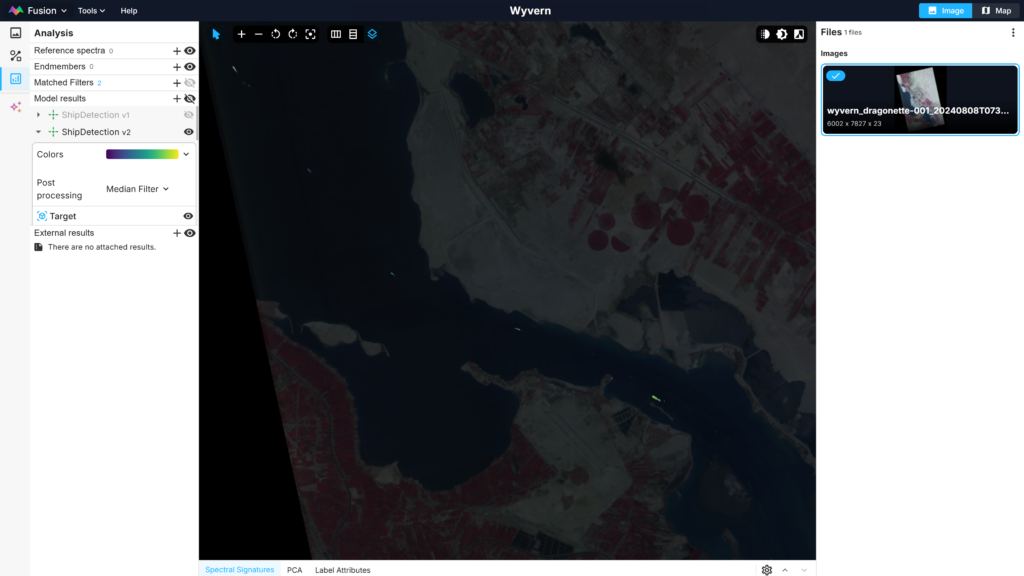

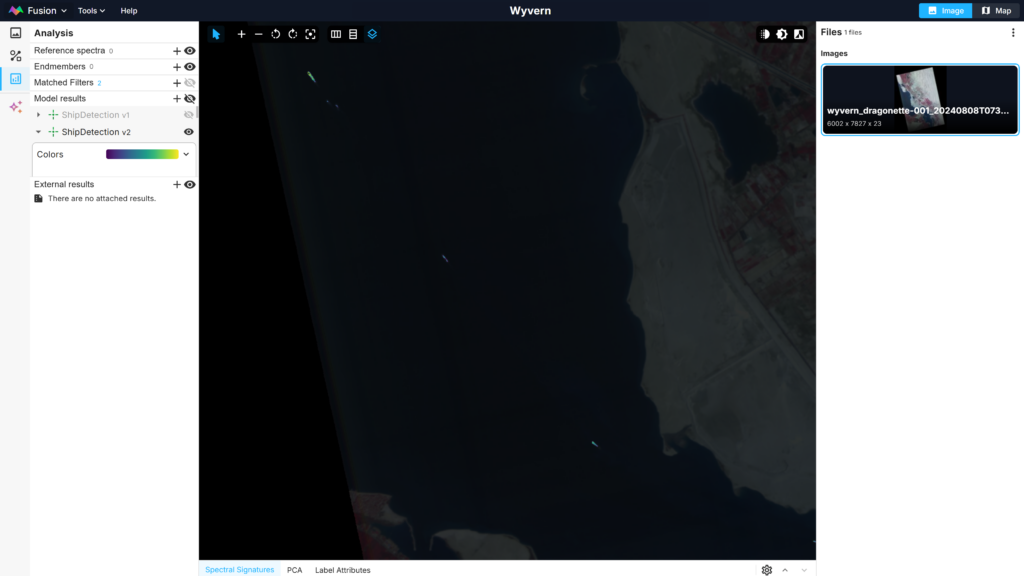

Back in the Fusion Explorer view, the model inference results can be visualized. Here they are overlaid on the original image with slight transparency. A number of ships navigating the canal, including the smallest ones that were not initially labeled, can now be clearly distinguished by their yellow-green tint.
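A semi-transparent overlay like this is typically an alpha blend: each detected pixel becomes (1 − α) × image + α × tint. The sketch below applies that blend with NumPy. It assumes an RGB rendering already scaled to [0, 1] and a boolean detection mask (for example, model score above some threshold); the tint colour, `alpha` value and `overlay_detections` name are hypothetical, not Fusion's rendering code.

```python
import numpy as np


def overlay_detections(rgb: np.ndarray, mask: np.ndarray,
                       color=(0.6, 1.0, 0.2), alpha: float = 0.6) -> np.ndarray:
    """Alpha-blend a solid colour over an RGB image wherever mask is True.

    rgb: float array in [0, 1] with shape (rows, cols, 3).
    mask: boolean array with shape (rows, cols).
    Pixels outside the mask are returned unchanged; the input is not modified.
    """
    out = rgb.copy()
    tint = np.asarray(color, dtype=rgb.dtype)
    out[mask] = (1.0 - alpha) * rgb[mask] + alpha * tint
    return out


# Usage: a black 2x2 image with one detected pixel in the top-left corner.
rgb = np.zeros((2, 2, 3))
mask = np.array([[True, False], [False, False]])
blended = overlay_detections(rgb, mask)
print(blended[0, 0])
```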

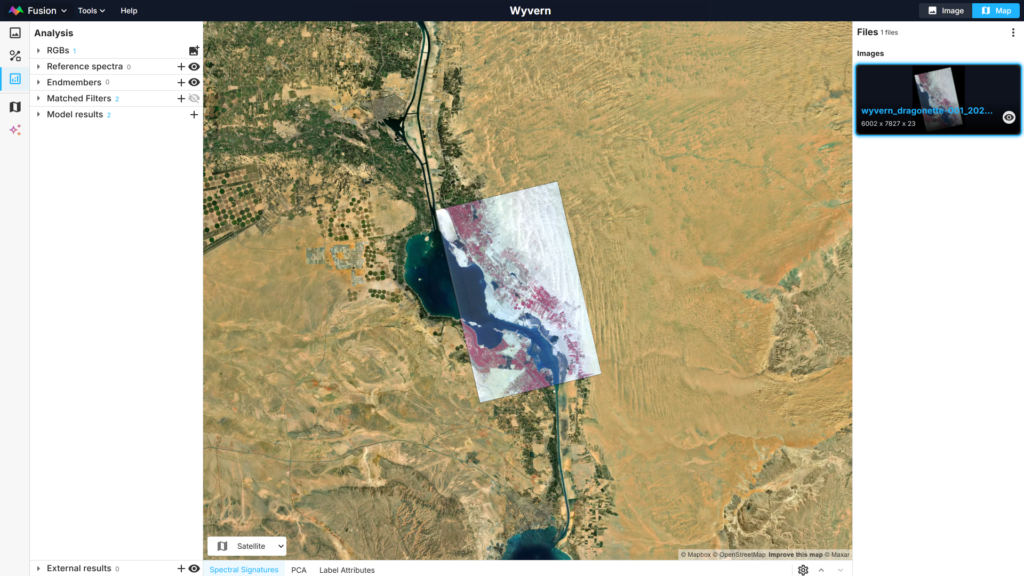

Of course, for georeferenced images, the same images and results can also be displayed on top of a base map.
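Placing results on a base map requires converting pixel positions to map coordinates. For rasters that carry a GDAL-style affine geotransform, that conversion is the standard six-coefficient formula sketched below. The geotransform values in the usage example (a north-up grid with 5.3 m pixels and a made-up origin) are hypothetical, and a delivered product's pixel size may differ from the nadir resolution.

```python
def pixel_to_map(col: float, row: float,
                 gt: tuple[float, float, float, float, float, float]) -> tuple[float, float]:
    """Map a pixel position to georeferenced (x, y) using a GDAL-style geotransform.

    gt = (x_origin, pixel_width, row_rotation, y_origin, col_rotation, pixel_height),
    where pixel_height is negative for north-up images.
    (col, row) refers to the pixel's upper-left corner; add 0.5 to each for its centre.
    """
    x = gt[0] + col * gt[1] + row * gt[2]
    y = gt[3] + col * gt[4] + row * gt[5]
    return x, y


# Hypothetical north-up geotransform with 5.3 m pixels and an invented origin.
gt = (500000.0, 5.3, 0.0, 3350000.0, 0.0, -5.3)
print(pixel_to_map(100, 200, gt))
```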

Take it to the Next Level

Because the Fusion platform is coupled with large-scale cloud computing resources, it can analyze large datasets consisting of hundreds of hyperspectral images in a straightforward and scalable manner. Classification, regression, target detection and spectral unmixing models are available on the platform to turn these large volumes of data into actionable insights. It is perfectly suited for both your remote sensing and industrial hyperspectral needs.