Sub-pixel level Deep Learning, real-time analysis, and hardware-accelerated data compression and streaming.

Compatible with any hyperspectral or multispectral sensor, whether in orbit or on Earth.

Sub-pixel Level AI

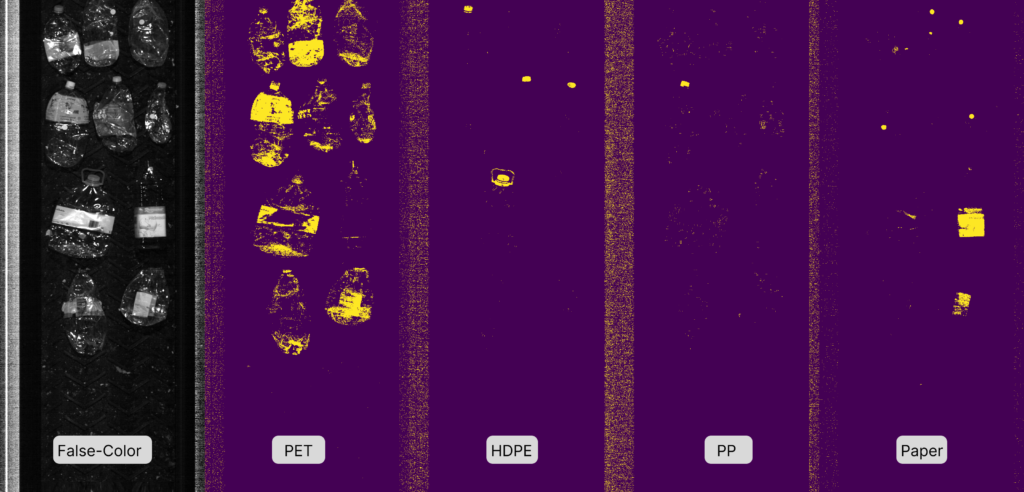

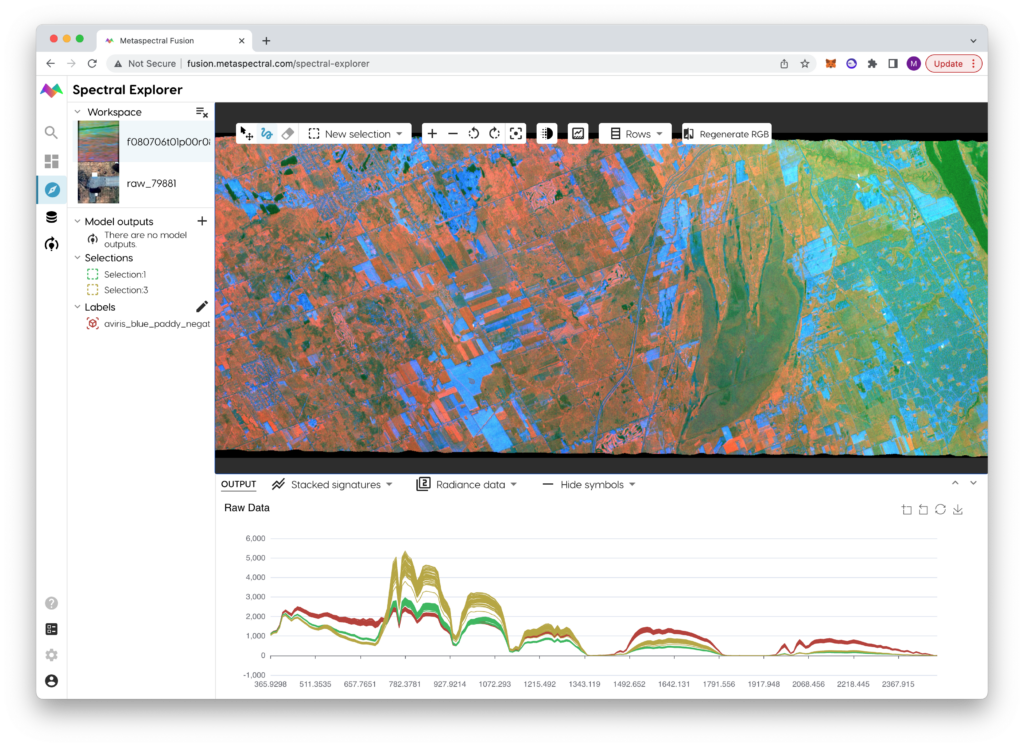

Spectral unmixing with Deep Learning

Deep Neural Networks

Deep Neural Networks are at the core of the data analysis performed on Metaspectral's Fusion platform. Fusion supports training with a range of neural architectures, including ResNets, Convolutional Neural Networks, and Autoencoders.

Custom Loss Functions: Spectral Loss

Metaspectral has developed custom loss functions for training deep neural networks, and this work has been peer-reviewed by defence scientists in Canada (DRDC). These custom loss functions address training instability, convergence, and spectral unmixing, and form the backbone of Fusion's advanced spectral analysis algorithms.
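To make the idea of a spectrally aware loss concrete, here is a minimal sketch of one widely used measure, the spectral angle between a predicted and a reference spectrum. This is an illustrative assumption, not Metaspectral's published loss: the names `spectral_angle` and `spectral_angle_loss` are hypothetical, and a real training setup would express the same computation in a differentiable framework.

```python
import math


def spectral_angle(predicted, reference, eps=1e-12):
    """Angle in radians between two spectra (0 means identical spectral shape)."""
    dot = sum(p * r for p, r in zip(predicted, reference))
    norm_p = math.sqrt(sum(p * p for p in predicted))
    norm_r = math.sqrt(sum(r * r for r in reference))
    cosine = dot / (norm_p * norm_r + eps)
    # Clamp to guard against rounding slightly outside [-1, 1].
    cosine = max(-1.0, min(1.0, cosine))
    return math.acos(cosine)


def spectral_angle_loss(predicted_batch, reference_batch):
    """Mean spectral angle over a batch of (predicted, reference) spectra."""
    angles = [spectral_angle(p, r) for p, r in zip(predicted_batch, reference_batch)]
    return sum(angles) / len(angles)
```

Because the angle ignores overall brightness, a spectrum and a scaled copy of it give a loss near zero, which is one reason angle-based terms are often paired with a magnitude-sensitive term such as mean squared error.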

Rare Target Detection

Detecting rare targets is complicated by the lack of labelled data and of samples "in the wild". Metaspectral's Fusion uses synthetic data generation to train target detection neural networks, so an AI model can be trained from just a single spectral signature. Our algorithms outperform classical approaches, such as the Adaptive Cosine Estimator (ACE), reducing false positives by up to 100x.
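One simple way to picture synthetic data generation from a single signature is linear mixing: the target spectrum is blended into background spectra at random sub-pixel fill fractions. The sketch below assumes that linear mixing model, which the text above does not confirm as Fusion's actual process; the function name `make_synthetic_pixels` and its parameters are hypothetical.

```python
import random


def make_synthetic_pixels(target, backgrounds, n_samples,
                          max_fill=1.0, noise_std=0.0, seed=0):
    """Return (spectra, fill_fractions) built by linear mixing.

    Each sample is  a * target + (1 - a) * background  plus optional
    Gaussian noise, where a is the sub-pixel fill fraction of the target.
    """
    rng = random.Random(seed)
    spectra, fractions = [], []
    for _ in range(n_samples):
        background = rng.choice(backgrounds)
        a = rng.uniform(0.0, max_fill)
        mixed = [a * t + (1.0 - a) * b + rng.gauss(0.0, noise_std)
                 for t, b in zip(target, background)]
        spectra.append(mixed)
        fractions.append(a)
    return spectra, fractions
```

The returned fill fractions can serve as regression labels, or be thresholded into target / no-target labels for a detector.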

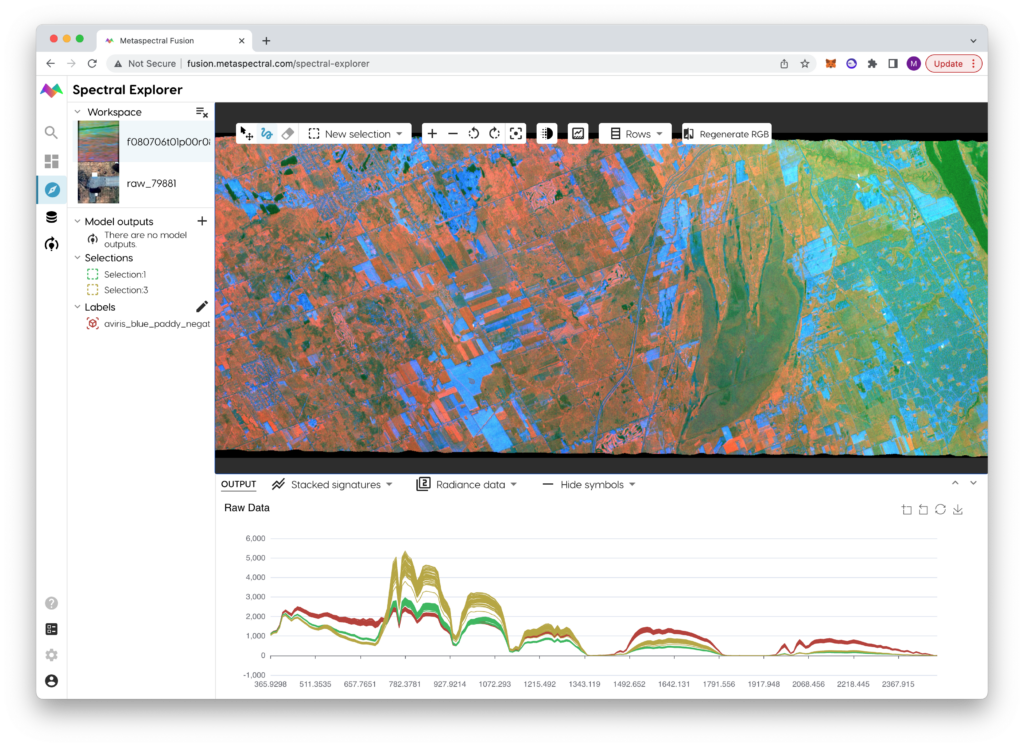

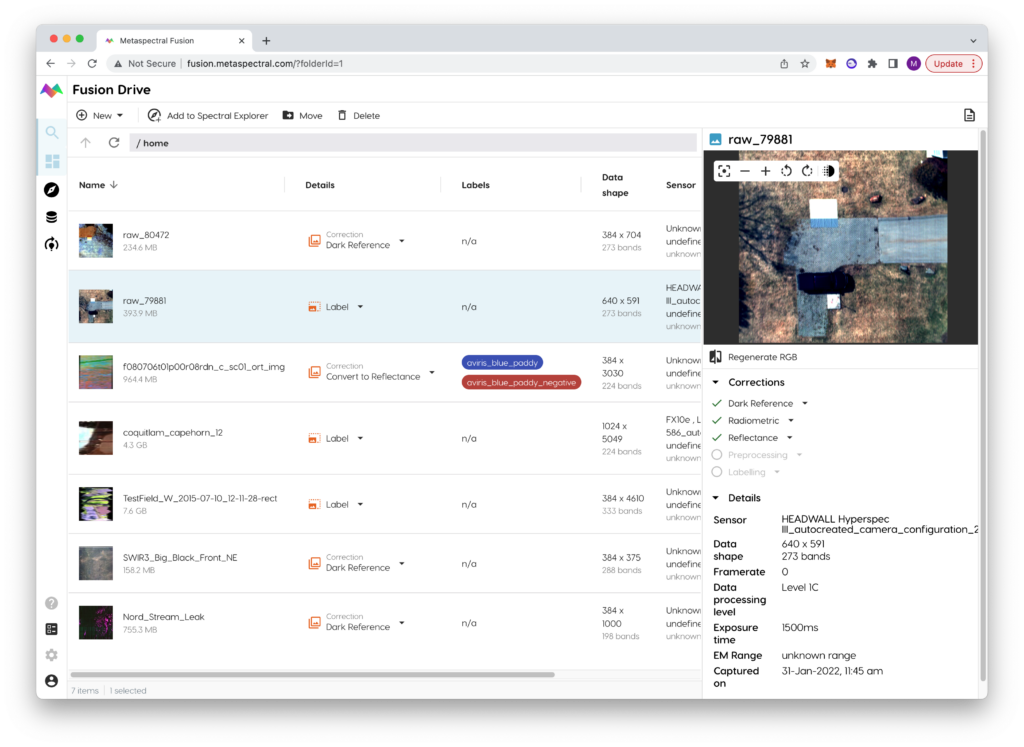

Hyperspectral and Multispectral Data Management

Advanced Data Compression and Streaming

CCSDS-123-b-2: Lossless and Near-Lossless Data Compression

The Consultative Committee for Space Data Systems (CCSDS) is a consortium made up of national space agencies, including NASA, CSA and ESA. The CCSDS-123-b-2 standard consists of purpose-built algorithms for lossless and near-lossless compression of hyperspectral data. It is integral to Metaspectral's Fusion platform, facilitating the streaming and sharing of hyperspectral data across organizations. A typical 1 Gbps data stream is losslessly compressed down to ~350 Mbps.
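As a quick worked example of what those figures imply, the snippet below converts the quoted rates into a compression ratio and a bandwidth saving. It assumes 1 Gbps means 1000 Mbps; the helper name `compression_stats` is purely illustrative.

```python
def compression_stats(raw_mbps, compressed_mbps):
    """Return (compression ratio, fractional bandwidth saving)."""
    ratio = raw_mbps / compressed_mbps
    saving = 1.0 - compressed_mbps / raw_mbps
    return ratio, saving


# Figures quoted above: 1 Gbps in, roughly 350 Mbps out.
ratio, saving = compression_stats(1000.0, 350.0)
print(f"compression ratio ~{ratio:.2f}:1, bandwidth saving ~{saving:.0%}")
```

In other words, the quoted rates correspond to a ratio of just under 3:1, or roughly two thirds of the link bandwidth freed up.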

Hyperspectral Data Streaming

In an industry first, Metaspectral has developed a protocol for streaming hyperspectral data. It enables hyperspectral data to be analyzed in real time, pixel by pixel, as it is being captured.
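The pixel-by-pixel pattern can be illustrated with a plain Python generator pipeline, where each spectrum is analyzed as soon as it arrives rather than after a full image is stored. This is only a conceptual sketch and says nothing about the wire format of Metaspectral's protocol; `fake_sensor`, `analyze_stream`, and `is_bright` are hypothetical stand-ins.

```python
from typing import Callable, Iterable, Iterator, Sequence, Tuple

Pixel = Tuple[int, int, Sequence[float]]  # (row, column, spectrum)


def fake_sensor(n_rows: int, n_cols: int, n_bands: int) -> Iterator[Pixel]:
    """Hypothetical stand-in for a sensor feed: yields one pixel at a time."""
    for row in range(n_rows):
        for col in range(n_cols):
            # Made-up spectrum: brightness grows with the column index.
            yield row, col, [float(col)] * n_bands


def analyze_stream(pixels: Iterable[Pixel],
                   analyze: Callable[[Sequence[float]], bool]
                   ) -> Iterator[Tuple[int, int, bool]]:
    """Apply `analyze` to each spectrum as soon as its pixel arrives."""
    for row, col, spectrum in pixels:
        yield row, col, analyze(spectrum)


def is_bright(spectrum: Sequence[float]) -> bool:
    """Toy per-pixel analysis: mean value at or above 1.0."""
    return sum(spectrum) / len(spectrum) >= 1.0


results = list(analyze_stream(fake_sensor(2, 3, 4), is_bright))
```

Since both stages are generators, memory use stays at one pixel at a time regardless of how large the captured scene is.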

Hardware Acceleration

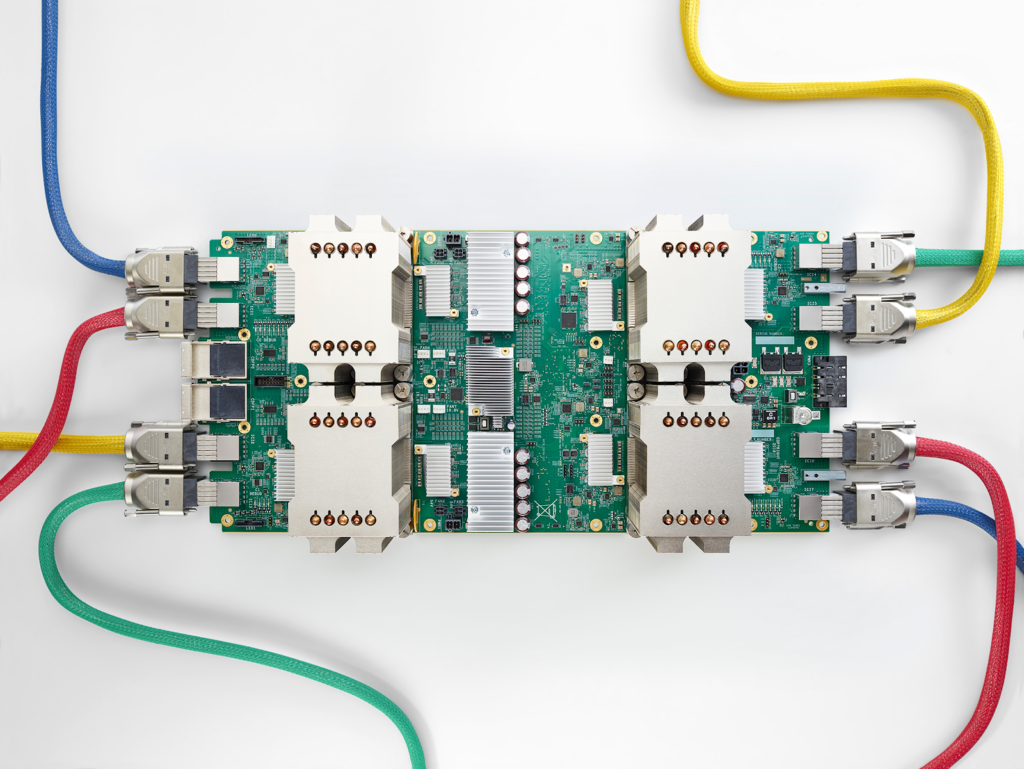

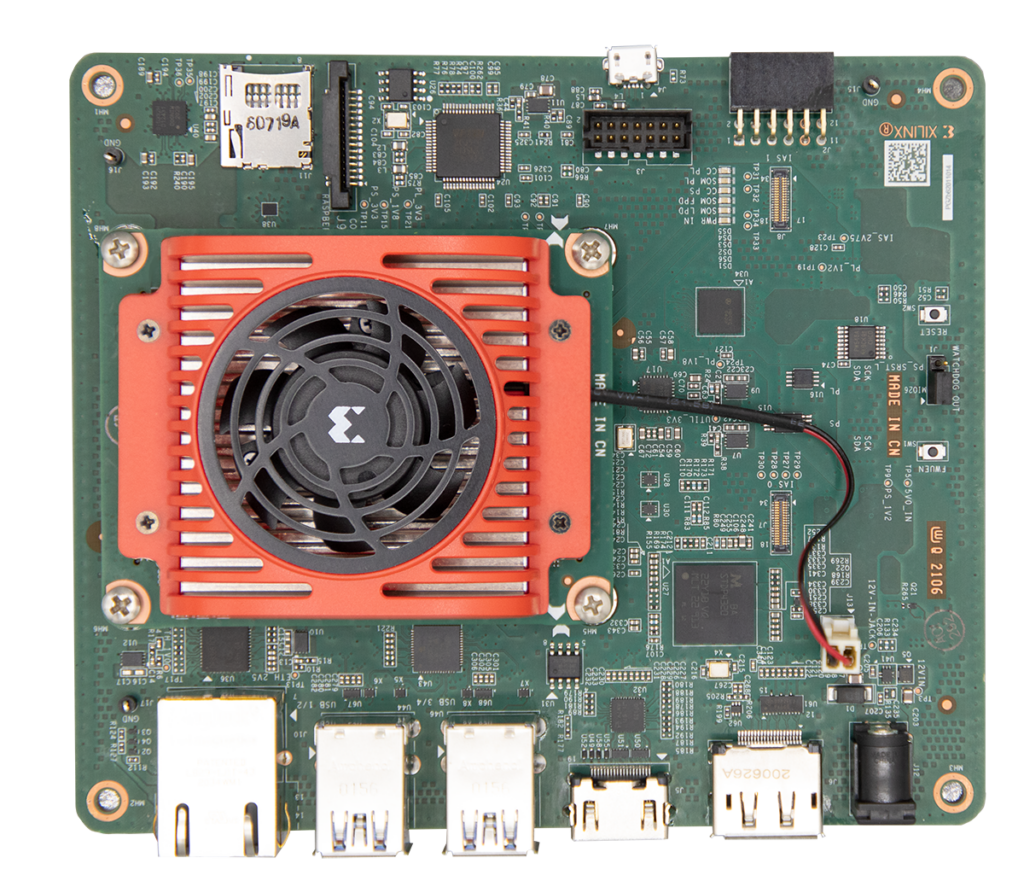

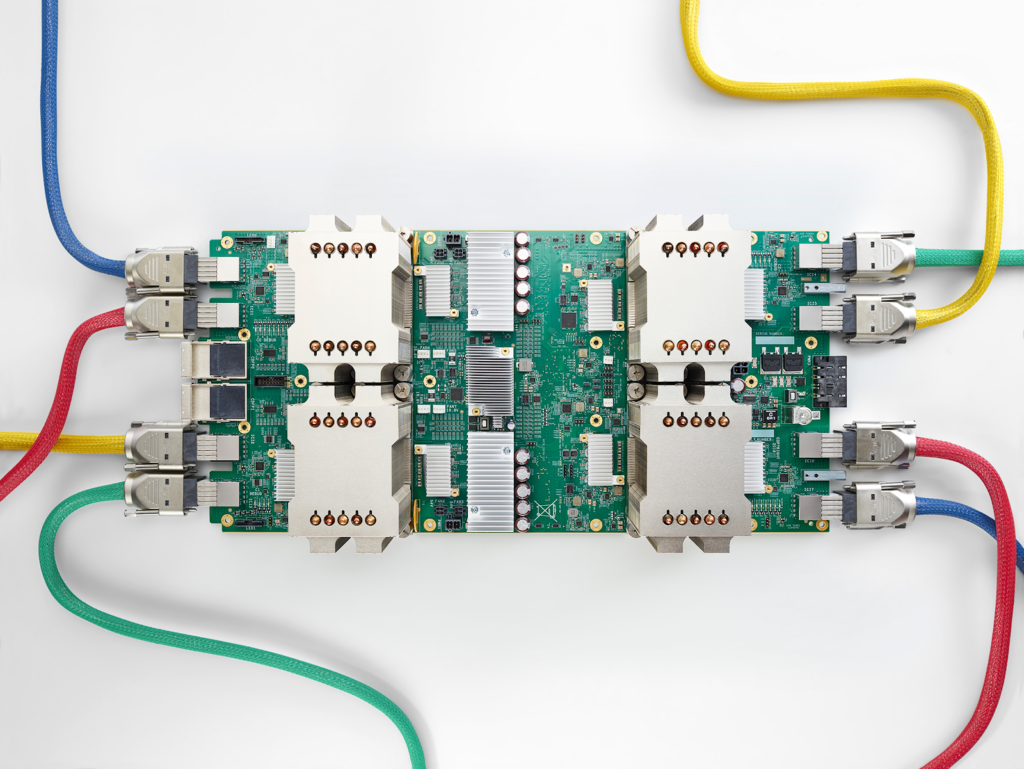

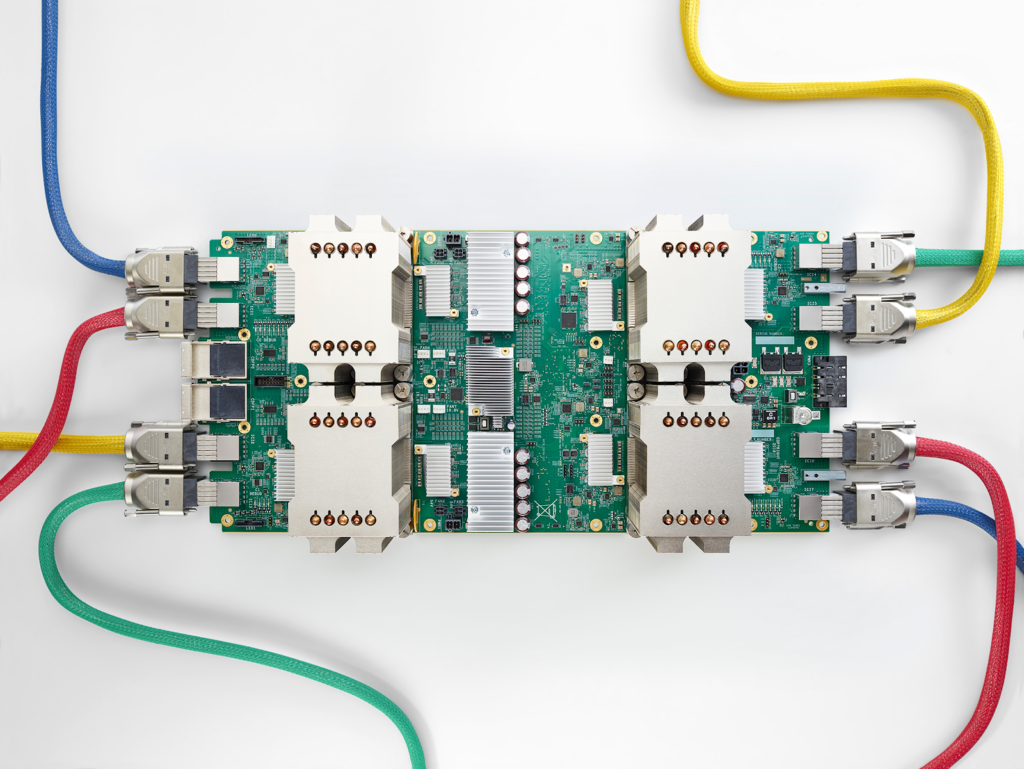

FPGA and TPU Accelerated Computing

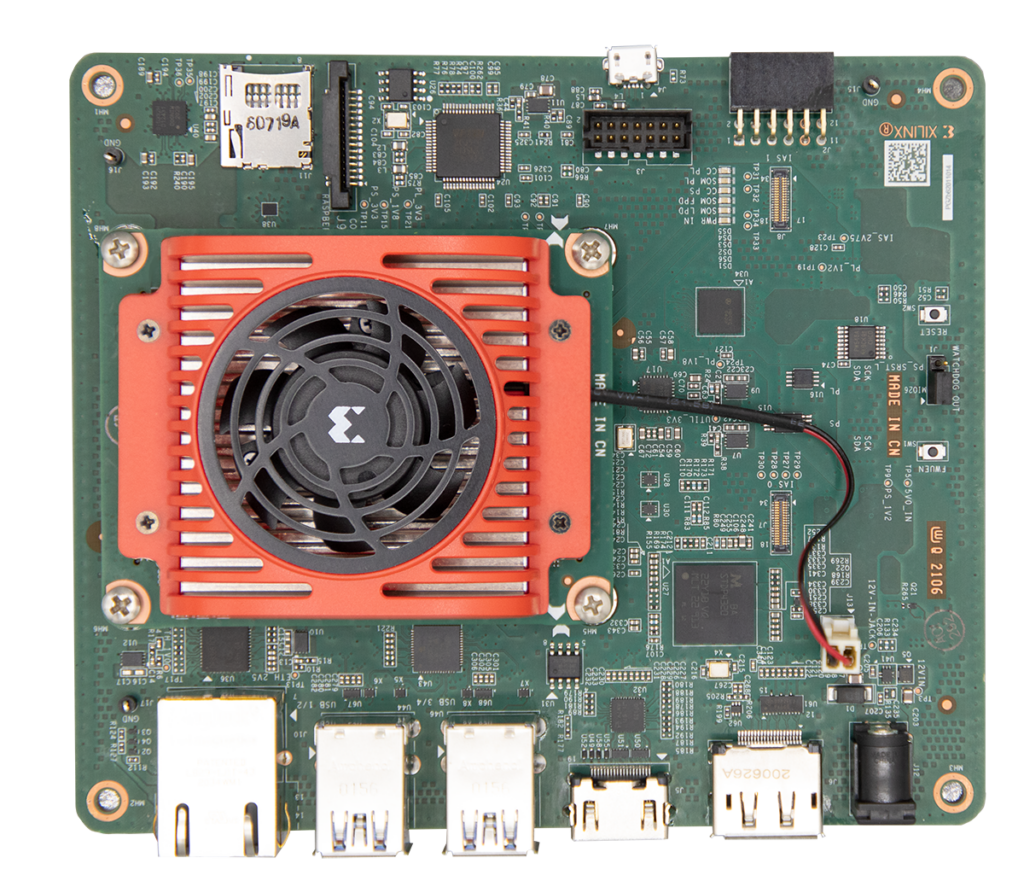

FPGA Accelerated Data Compression & Streaming

FPGA IP cores are capable of ingesting and processing hyperspectral data at over 37 Gbps. While manufacturer agnostic, they have been used in a variety of products, including those from Xilinx and Microsemi.

TPU Accelerated Deep Learning Training

The backend of Metaspectral's Fusion platform is built for Google Cloud Platform (GCP). AI model training jobs can be scheduled to run on either GPUs or TPUs. TPUs substantially accelerate AI training jobs and are well suited to data-heavy workloads such as hyperspectral data processing.

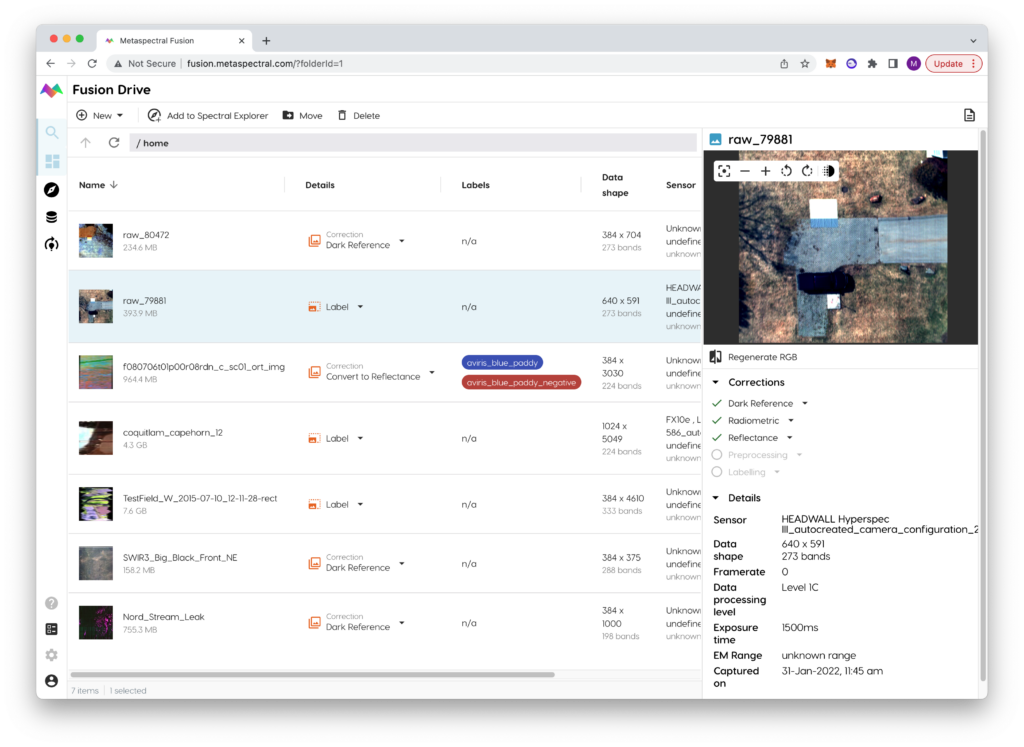

Manufacturer Agnostic

Any camera, any platform

Manufacturer Agnostic

Metaspectral's Fusion platform can interface with any camera that supports the GigE Vision or Camera Link protocols. As virtually all camera manufacturers support at least one of these protocols, Fusion is agnostic to the camera manufacturer.

Any Sensor Platform

Metaspectral’s Fusion platform can process data from sensors that are terrestrial, airborne, or in orbit.

Satellite Data Feeds

Support for commercial hyperspectral satellite data feeds is baked into Metaspectral's Fusion platform. Get in touch to learn more.